Published on 22 January 2025

The recent regulation of artificial intelligence (AI) adopted by the European Union marks a significant milestone in the governance of technological progress. Known as the Artificial Intelligence Act, this regulation not only establishes a legal framework for the development and use of AI, but also underlines the importance of ethics and responsibility in an era dominated by algorithms and big data. It is the first comprehensive law of its kind in the world and sets a high standard for the responsible development and use of AI.

AI has permeated almost every aspect of our daily lives, from healthcare to transport, commerce and communication. However, its rapid development and adoption have brought with them a number of ethical and social challenges. Issues such as algorithmic discrimination, lack of transparency, data privacy and the impact on employment are growing concerns. In light of this, the EU has decided to take a proactive stance.

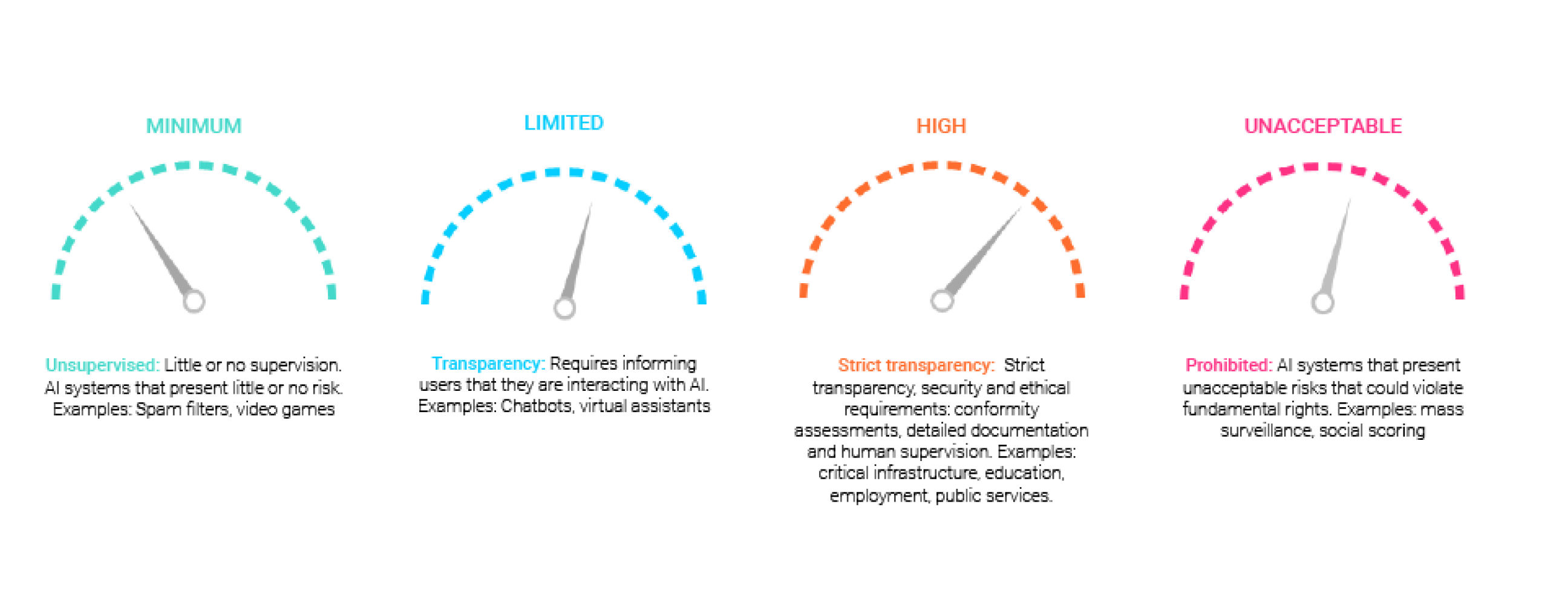

The Artificial Intelligence Act introduces a risk-based approach to the regulation of AI systems, classifying them into four levels: minimal, limited, high and unacceptable risk. This classification allows for proportionate regulation, focusing regulatory effort on the uses of AI that may have the most significant impact on people's rights and safety. The regulation prohibits uses of AI that are considered to pose an unacceptable risk, such as mass surveillance and social scoring, practices that may violate citizens' fundamental rights. AI systems considered high-risk, such as those used in critical infrastructure, education, employment and public services, must comply with strict requirements on transparency, security and ethics. These include passing a conformity assessment before the system is placed on the market.

An essential element of the regulation is transparency. Users must be informed when they are interacting with an AI system, so that they understand when their decisions or interactions are being mediated by algorithms. This is crucial to maintaining trust in these technologies.

To complement this regulation, the European Commission proposed the AI Liability Directive to make it easier to bring liability claims for damage caused by AI-enabled products and services. In addition, the creation of the EU AI Office aims to streamline the implementation of the rules. These components reinforce the EU's commitment to ensuring that AI is developed in a safe and ethical manner, providing an accessible framework for businesses and users.

Despite its merits, the regulation faces criticism that it does not give companies sufficient clarity on how to comply with the rules, which could lead to legal uncertainty and stifle innovation. In addition, the assessment and compliance requirements can be cumbersome and costly, especially for small businesses and start-ups. There is also concern that the different risk levels may be applied inconsistently, fragmenting the European market with different rules in each country. Meanwhile, some fear that countries such as the US and China, which are investing heavily in AI development, will gain a competitive advantage while European regulation slows down Europe's progress in this field and makes it less competitive.

Another point of controversy is the definition of ‘AI’ in the law, which critics consider too broad, since it could cover technologies that should not be subject to such strict rules.

The restrictions on the use of AI for real-time facial recognition in law enforcement have also been controversial, with some arguing in favour of its potential to fight crime.

Despite these criticisms, the regulation can also be seen as an opportunity to lead the way towards more ethical and responsible AI. By setting high standards of safety and transparency, the European Union not only protects its citizens, but also fosters an environment of trust in technology. The balance between protection and progress is essential: an AI that respects human rights and acts transparently is not only fairer, but also more likely to be accepted and adopted by society as a whole.

The new regulation is the beginning of an ongoing dialogue on how to integrate AI into our lives in a safe and beneficial way. In a world where technology is advancing by leaps and bounds, the European Union has made it clear that progress must not come at the expense of fundamental values and human rights. While the new regulation is a positive step, there is still work to be done and loopholes to be closed, especially around large models, whose concentration in the hands of a few providers could lead to a monopoly of AI solutions in the market.

Private enterprise is the driving force behind the rapid evolution of AI, and the EU and public administrations need to rely on it to sustain growth. Public-private collaboration is crucial to driving innovation while maintaining ethical and safety standards.

Businesses and governments should work together to develop guidelines and best practices for the development and use of AI. We must also ensure that the law is effectively enforced and regularly reviewed to reflect the rapid developments in AI technology.

With this regulation, the European Union is laying the foundations for a future where technology and humanity can coexist harmoniously and to the benefit of all, but it is only the beginning.

#AI #EuropeanRegulation #ArtificialIntelligence #Future #Technology #GenAI #LLM #FM